The name comes from “Google Brain”, the artificial intelligence research group at Google where the idea for this format was conceived.

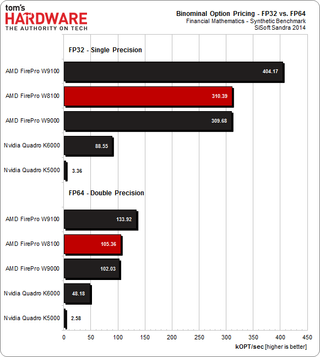

FP16 covers the range ~5.96e−8 (6.10e−5 for the smallest normal number) … 65504, with roughly 3 to 4 significant decimal digits of precision. There is a trend in DL towards using FP16 instead of FP32, because lower-precision calculations do not seem to be critical for neural networks. The additional precision of FP32 gives nothing, while being slower, taking more memory, and reducing the speed of communication.

- Can be used for training, typically using mixed-precision training (TensorFlow/PyTorch).
- Can be used for post-training quantization for faster inference (TensorFlow Lite). Other formats in use for post-training quantization are the integer formats INT8 (8-bit integer), INT4 (4 bits), and even INT1 (a binary value).
- Supported in TensorFlow (as tf.float16) and PyTorch (as torch.float16 or torch.half).
- Currently not in the C/C++ standard (but there is a short float proposal). Otherwise, it can be used with special libraries.
- Not supported in x86 CPUs (as a distinct type).
- Was poorly supported on older gaming GPUs (with 1/64 of FP32 performance; see the post on GPUs for more details).
- Now well supported on modern GPUs.

Another IEEE 754 format is single-precision floating point (FP32), the format that was the workhorse of deep learning for a long time.

Among recent GPUs with unrestricted FP64 support are GP100/102/104 in Tesla P100/P40/P4 and Quadro GP100, GV100 in Tesla V100/Quadro GV100/Titan V, and GA100 in the recently announced A100. Interestingly, the new Ampere architecture has 3rd-generation tensor cores with FP64 support: the A100 Tensor Core now includes new IEEE-compliant FP64 processing that delivers 2.5x the FP64 performance of V100.
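The FP16 range and precision figures quoted earlier can be checked without a GPU or a deep learning framework. The following is a minimal sketch that assumes only Python's standard `struct` module, whose `e` format code packs IEEE 754 half-precision values. The helper names `fp16_from_bits` and `round_to_fp16` are illustrative and not part of any library.

```python
import struct


def fp16_from_bits(bits: int) -> float:
    """Interpret a 16-bit integer as an IEEE 754 half-precision bit pattern."""
    return struct.unpack("<e", struct.pack("<H", bits))[0]


def round_to_fp16(x: float) -> float:
    """Round a Python float (FP64) to the nearest FP16 value and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


# Limits of the format, read directly from their bit patterns.
smallest_subnormal = fp16_from_bits(0x0001)  # 2**-24, about 5.96e-8
smallest_normal = fp16_from_bits(0x0400)     # 2**-14, about 6.10e-5
largest_finite = fp16_from_bits(0x7BFF)      # 65504

# With 10 fraction bits, integers above 2048 are no longer all representable.
rounded_int = round_to_fp16(2049.0)          # rounds to 2048.0

# 0.1 cannot be stored exactly; the error is roughly 2.4e-5.
error_at_0_1 = abs(round_to_fp16(0.1) - 0.1)

print(smallest_subnormal, smallest_normal, largest_finite)
print(rounded_int, error_at_0_1)
```

The relative error near 0.1 is on the order of 1e-4, which is where the "3 to 4 significant decimal digits" figure comes from.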

- Most GPUs, especially gaming ones including the RTX series, have severely limited FP64 performance (usually 1/32 of FP32 performance instead of 1/2; see the post on GPUs for more details).
- FP64 is supported in TensorFlow (as tf.float64) and PyTorch (as torch.float64 or torch.double).
- On most C/C++ systems, FP64 represents the double type.
- The format is used for scientific computations with rather strong precision requirements.
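To illustrate why computations with strong precision requirements prefer FP64, here is a small sketch comparing it with FP32. It assumes that Python's built-in `float` is an IEEE 754 double, which holds on essentially all current platforms, and it uses the standard `struct` module rather than the tf.float64 or torch.float64 types mentioned above. The helper `round_to_fp32` is a hypothetical name introduced for this example.

```python
import struct
import sys


def round_to_fp32(x: float) -> float:
    """Round a Python float (FP64) to the nearest FP32 value and back."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


# FP64 has a 53-bit significand, FP32 a 24-bit one.
fp64_epsilon = sys.float_info.epsilon  # 2**-52 on IEEE 754 platforms
fp32_epsilon = 2.0 ** -23

# 2**24 + 1 is exactly representable in FP64 but not in FP32.
value = float(2 ** 24 + 1)
in_fp64 = value
in_fp32 = round_to_fp32(value)

# A tiny update to a value near 1.0 survives in FP64 but is lost in FP32.
update_kept_fp64 = (1.0 + 1e-10) != 1.0
update_kept_fp32 = round_to_fp32(1.0 + 1e-10) != 1.0

print(in_fp64, in_fp32)
print(update_kept_fp64, update_kept_fp32)
```

Small increments that vanish in FP32 are the kind of effect that can accumulate over long-running numerical simulations.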
